Technology never makes mistakes – unlike the humans who design it. Who never fail to anticipate the unanticipated.

Perfection issuing from imperfection, reversing the usual order of things.

Sarcasm, in case you didn’t pick up on it.

This 190 proof moonshine – distilled by arrogant technocrats like Elon Musk – is going to get people hurt as automated-driving technology comes online.

I recently test drove a 2018 VW Atlas (review here) which has what several other new cars also have: The ability to partially steer itself, without you doing a thing.

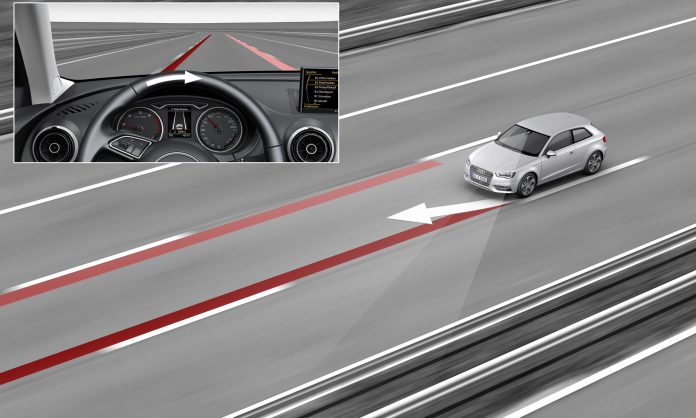

There are hi-res cameras built into the front end of the car that scan the road ahead; they see the painted lines to the left and right and use them as reference points to tell servo-motors when (and how much) to turn the steering wheel to keep the car in between the lines.

It is billed as Lane Keep Assist (or more honestly, Steering Assist) but words don’t change what it does, which is turn the steering wheel for you . . . and sometimes, against you.

Enter the unintended consequence.

The system – which is touted (you knew this was coming) as a saaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaafety aid – is set up such that you must signal before making a lane change, else the semi-self-steering system will perceive this as an affront and fight to prevent you from making a lane change. It feels exactly like the hand of your mother-in-law pulling the steering wheel in the opposite direction. The force applied is not great, but it’s there – and it’s very weird to feel the car countermanding your inputs – or at least, trying to.
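For the curious, the behavior described above can be roughed out in a few lines. This is only a sketch under loud assumptions, not VW's actual code: the names `LaneView` and `assist_torque`, the gain, the torque cap and the units are all invented for illustration. It shows the two things that matter here: the correction pushes toward the middle of the lane, and the turn signal is the only thing that switches it off.

```python
from dataclasses import dataclass


@dataclass
class LaneView:
    """Hypothetical camera output: distance in meters from the car to each painted line."""
    left_m: float
    right_m: float


def assist_torque(view: LaneView, signaling: bool,
                  gain: float = 2.0, max_torque: float = 3.0) -> float:
    """Return a steering torque (assumed units); positive steers right, negative steers left."""
    # The turn signal is the only input that tells the system the drift is intentional.
    if signaling:
        return 0.0
    # Positive offset: the car sits left of lane center, so there is more room on the right.
    offset_m = (view.right_m - view.left_m) / 2.0
    # Proportional correction toward center, capped so the push stays modest.
    torque = gain * offset_m
    return max(-max_torque, min(max_torque, torque))
```

Note what the sketch does not have: any input for "deer in the lane." A driver who swerves left without signaling simply looks, to logic like this, like a car drifting out of its lane, and gets pushed back to the right.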

But all you have to do is signal to prevent this from happening! The system stops trying to steer against you, then. And shouldn’t one always signal?

As important as courtesy is, one may not have time or presence of mind to do so in an emergency situation. A deer suddenly bounding into your lane, for instance.

Or, a Clover.

Now the car is fighting you – trying to keep you in your lane when remaining in your lane is very likely to result in bent metal.

For a man with strong arms and grip strength, it’s mostly an annoyance – not unlike brushing your annoying mother-in-law’s hand off the wheel. But for a woman or someone without strong arms and a firm grip on the wheel?

The result might be – well, not “safe.”

Remember: Computers aren’t smart. They are programmed. They are not (yet) thinking machines. They are automatons, fed certain operating parameters within which they operate – and that’s it. When a not-anticipated variable crops up, what happens? You may remember the classic ‘60s sci-fi series, Lost in Space and the belligerent, arm-waving robot.

It does not compute!

Exactly.

Humans, on the other hand, think. Or at least, are capable of it. Whether they exercise it is another question, of course. But the point is, they – unlike a computer program – can adjust to deal with an unanticipated variable. They know that signaling is of not much importance when a deer bounds into the path of a car; that swerving – right got-damned now – is of paramount importance.

But the technocrats know better, don’t they?

Of course, they don’t. Being imperfect humans, just like you and me. That is the flaw with their logic. The same flaw that says because humans cannot be trusted to govern themselves, humans must govern them… er…

But the technocrats, Elon Musk being a kind of uber Morlock technocrat, believe they do know best – and (much worse) believe they are our betters and thus we’d better do as we’re told.

And buy what they decree.

They have weighed the risks and run the odds – and if there is a chance that we might be killed as a result of their technocratic arrogance . . . well, omelettes and eggs. We are not to be allowed to make the calculation – and the decision – for ourselves.

Airbags were the first such imposition of the technocratic vision. The evil precedent it set has been expanded upon ever since – and the unintended consequences multiply and compound.

Air bags, when they were first force-fed to us, proved lethal to older/frail people and to children. Sensors had to be added to the seats of cars – to detect size and weight – in order to “depower” the in-dash Claymores when a child or older person happened to be sitting in front of one. But now, an annoying buzzer goes off if one sets, say, a laptop computer or even a bag of takeout food on the passenger seat – unless of course one has buckled these items in for safety.

If not, the buzzer goes off – and distracts.

Distracted drivers are not safe drivers.

And what of the millions of extremely not-safe Takata air bags that are shrapnel explosions-waiting-to-happen?

Omelettes and Eggs.

The technocrat vision – the technocratic arrogance. Our lives; their playthings.

I’ve written about the federal mandate requiring all new cars to have roofs sturdy enough to support the entire weight of the car in the event it rolls upside down. Another technocratic befehl. This requires roof supports much thicker than they used to be – which often greatly restricts the driver’s peripheral view, making it less safe to pull out into traffic from a side street.

As this automated driving stuff comes full bloom, things are really going to turn topsy-turvy.

Musk and his acolytes purvey a sanitized, Jetsons-like future of seamless, chic, ultra-efficient and fault-free transportation (note that driving becomes obsolete in this vision). But there will be faults – and unintended consequences, too.

Which we are not going to be consulted about, much less given a choice about.

The trick – for them – is to gull the public into buying into the technocratic Jetson vision so as to make the imposition of the technocratic actuality a fait accompli. The words of Nancy Pelosi come to mind: You have to pass it to find out what’s in it.

Pelosi, of course, knew perfectly well what was in it. So do the technocrats.

Musk especially.

Do you think most new cars come with the technology, but it’s just disabled because you didn’t pay for it?

Hi C_lover,

Not yet.

Many new cars have or offer things like Lane Keep Assist and automated emergency braking but these are not fully automated technologies… yet.

Teslas are the only cars that I’m aware of which have fully automated driving systems already installed. One more reason to tape that frog-faced Musk’s picture to your dartboard…

Just this past weekend I was driving on an interstate and swerved to avoid a massive pothole. If I had hit it at 70, I probably would have had a blowout. Show me a self-steering car that can avoid potholes, and knows when it is safe, and clear, to do that. By the next day the pothole was patched.

That is one of those things that an active suspension could easily deal with. Not so much the passive ones on almost all vehicles. On the other hand, it should be dealt with by the department of transportation that drives down all the roads and saw the pothole forming before it became a pothole, if they were doing their job. If not the DOT, if cops aren’t observant enough to spot them forming, how can we rely on them to identify a crime in the making?

I had the displeasure of driving my father-in-law’s new Volvo XC-90, which has this added “feature.” I was driving in Louisiana, which is notorious for poor roads. Like many two-lane roads I’ve driven on, the highway I was driving down had depressions (valleys) in each lane caused by the tires of cars and repeated travel. The valleys left by the repeated wearing down of the pavement leave the pavement higher in the middle of the lane and at the edges. On this particular day, it had rained, leaving standing water in the depressions. I attempted to keep the tires of the Volvo on the drier part of the pavement in my lane to provide more traction. Of course this put the tires closer to the outside white line and thus caused the auto-steering to put the vehicle back in the middle of the lane and the tires into the water. I loathe the repeated addition of “safety” features to vehicles that I cannot deselect or have to turn off every time I start the vehicle. I’ve even had a dealership cut the wires to the beeper that sounds every time I’m backing up in my 4Runner.

The valleys (or ruts) were caused by the absence of a proper base for the paving, not by traffic, which is the raison d’être for the road.

I remember my first experience with “antistop” brakes. A new 1996 Ford F150. People stopped in front of me suddenly. I hit the brakes and got cuthunk, cuthunk, cuthunk. I’m running out of stopping distance. I let off and hit the brakes again. Same thing. I’m about to rear-end somebody. I swerve and hit the ditch. They are also awesome when you are pulling a weighted-down trailer.

A heavily loaded trailer is much preferable to an empty one, especially if it has a lot of tires on the road.

I have the feeling that if self driving cars ever do make it on the road my local Heroes would still arrest me for DWI as I sit in the rear seat while my car takes me home from the bar.

The money to be made from DWI is just too much to let it go away.

No prosecutor could succeed if you were never proven to occupy the driver’s seat.

Shortly after the federales passed the zero tolerance for those of us “lucky” enough to have Commercial Driver’s Licenses there was a flurry of arrests made on truckers who had returned from a bar on foot or by taxi and got caught sitting in the driver’s seat of their big trucks with the ignition key in the switch. This is one of the most onerous rules that have ever been enforced against CDL holders because it applies to any vehicle, even bicycles. It only takes a sip of beer to flunk the breathalyzer. The only defense, if one is sober, is to demand an immediate blood draw, which will shut down many DUI arrests in most jurisdictions where it isn’t required by law.

A CDL driver can be shut down just by an occifer saying he smelled alcohol on his breath, no tests needed. Of course they won’t ever stop with simply making a guy stay where he is.

As far as self-driving cars go, no doubt they’ll require one person sitting in a “driver’s” seat, since the manufacturers aren’t going to build them unless they have some sort of bye for responsibility, and that means a human… somewhere… and that’s where a “driver’s” seat will come in, and that will never change.

Another law passed banning firearms in commercial vehicles instantly created an entire industry of victims.

I’ve been driving since I was 15.75 years old. My father taught me to drive and, so far as I know, never got a ticket or was involved in an accident. My teenaged driving ruined that for me, but I can claim the same record for the last 40+ years. I have had a Commercial Driver’s License since 1990, and my 50 months of long-haul trucking in all of the lower 48 showed me human asininity that I could never have imagined existed.

I am torn about driver assist systems. On the one hand, they are utterly predictable, unlike many human drivers. On the other hand, when we rely on them, it can be the last decision we make.

I can’t ignore the fact that drive by wire works far better in multi-million dollar aircraft than in land vehicles of any price. Boredom is a frequent cause of pilot error in airliner mishaps. If I had a dollar for every time I was woken up by a rumble strip…

All in all, I think that more money spent on effective driver education and traffic law enforcement would pay greater dividends in the end than any amount of consumer vehicle automation.

Relying on self-driving cars means that people’s competence as drivers will fall, so that they won’t know what the appropriate response is in an emergency.

If you want to know where this is going, read ‘Nineteen Eighty-Four’ and ‘Brave New World’.

The Gypsies in one East European country have a perfect description for someone who is “the stupidest of the stupid.” They call him “inginereule,” which means “you are an engineer!”

Case closed!

The only automatic safety “feature” my car has is anti-lock brakes, which I positively despise. Only the government could decide that a good safety feature would be to make hitting the brakes *not* stop the car.

Hi Darien,

ABS was the original/ur idiot-proofing system. Designed to eliminate the need to maintain a safe following distance, maintain a speed you could deal with if it became necessary to panic stop and so on.

The rest was inevitable.

eric, idiot something for sure. The first time my Chevy pickup went into ice skate mode I was lucky and barely missed t-boning an idiot who pulled across in front of me….with me pulling a trailer. Next time it was simply one of those things that make you get out and unhook the damned anti bs. A fool driving it for an inspection plugged it back in since it slid the tires. I forgot about it and it went into the Nancy Kerrigan mode once more and gave me about 20% braking. I hit a new Chevy pickup so hard it caused the lights on that plastic fantastic ’04 model to exit the grill and hang by the wires.

I thought about suing GM but a friend had already had his ass handed to him in court when they showed up with 3 lawyers. In Tx. you can get sued back for 3 times the amount if you lose (Republican thing when the shrub was guv). I took my money, rebuilt it and got the insurance to take the “salvage” off the title and made damned sure those brakes were never plugged in again. Inspection in Tx. involves driving the vehicle to make sure it will stop. I now only use a friend with an inspection station who never drives my vehicles or even sits behind the wheel. Good enough.

8,

“(I)nspection station(s) who never drive (the) vehicle or even sits behind the wheel” are common everywhere that inspections are the law, and Texas has far more than any other state because of Austin’s testitis. You have the same problems that all other sub-CDL haulers do. You are using equipment which is sub-standard for the job, and driving like Daisy Duke. Things would be different if such got pulled into pop-up DPS check points.

Darien,

I don’t know what make of vehicle you drive, but the ABS on my E-150 definitely stops it faster and straighter than I could in most cases. My objection is to the pedal feel when it engages, and mine is not automatic; I have to push on the brake to engage the ABS.

Here in Michigan we have a saying: “Don’t veer for deer.” Way more injuries and deaths are caused by leaving the roadway. Besides, the meat bonus is nice. As long as they’re not near Dow Chemical property.

Besides, if you hit a deer you can practice your venison benison.

We have these things made by RanchHand that will keep an 1100 lb steer or cow from doing any damage to a pickup. Many people in cars and minivans who run at night have the smaller versions that will keep you from getting killed and your vehicle from getting hurt to any great degree. Of course they’re heavy so they suck some gas, but for me and most people here who drive in the dark they’re worth whatever extra gas it costs (diesel mostly). Hogs are a big problem too.

I heard a friend pull up one morning in his Dodge diesel with a RanchHand front end. I noticed a deer head stuck in it. When I pointed it out he said “I heard something when I was looking down but didn’t know what it was”.

This is gonna be fun when the wrist-drivers start buying them. You know, the guys (mostly) that drive down the road with their wrist on top of the steering wheel while yapping on their sail fawn.

Madcap merriment will most assuredly ensue.

Kudos on this article, Eric. It reinforces my feelings on this topic. In fact, this is the comment I posted in September on a Washington Post article about having autonomous-vehicle-only roads in the future:

“I still believe that even by 2076, the only autonomous-vehicle-only lanes will just be an optional portion of roads in mostly urban areas.

Computers can do amazing things that can sometimes exceed a human’s capacity, but they will always be “stupid” in the sense that they cannot think for themselves; they will always follow instructions that ultimately originate from human programming.

There will always be certain scenarios where having a human taking over control of the vehicle is a necessity.”

The idea that you can engineer out human error is a long-held fantasy of most in the safety industry. Newer research has shown that it is exactly that: a fantasy. When you engineer out one hazard, you inevitably create new hazards and change how other hazards are manifested. For those interested, I suggest the book “Behind Human Error,” by Woods et al.

dagnarnit Erric, you be livin in murKKKuh, leader of ´em all. if the robot cars saves one more life than peoples driven, ain’t it werf it?

i mean really? why rail ´gainst all them windmills, chico¿ carpe dime and northern muskie.

this auto spell check/spacer is AWESOME.

https://www.youtube.com/watch?v=TnE5melVBCw

pancho

Eric, I’m wondering how insurance companies (and attorneys) are going to approach truly self-driving cars in terms of liability. When a driverless vehicle causes an accident (which I’m confident WILL happen), whose fault is it? The vehicle’s “driver” (who is actually just a passenger), or the vehicle’s manufacturer? I imagine lawyers are already pondering this and licking their chops. What do you think?

Hi Jef,

Well, first of all, I am certain this is why the speedy, Jetsons-like future promised will never happen. The automated cars will be programmed for hypercaution and operate slowly. Paralyzingly so. I tried to explain this to poster Mark, starry-eyed proponent of automated electric cars.

The one upside is that litigation and liability issues may keep this from ever getting anywhere – technical issues aside!

It may be the garage that last serviced your car that is held responsible. I can see this further pushing up servicing costs. Servicing your own car will be outlawed, and older cars will be taken off the road for ‘safety’ reasons. If the manufacturer is held responsible, they will be able to argue for a monopoly on servicing their products. They may not even sell them at all. In the future, it may only be possible to rent, or lease, a ‘self-driving’ car.

Hi Gordon,

I don’t think it’s coincidental that “mobility” (transportation as a service) is being pushed in tandem with electric/self-driving cars – in part for just the reasons you’ve laid out.

The persons liable ought to be the programmers, the marketeers (for promoting something which isn’t to to the naive), the salesman (for selling something which is not as it is purveyed to be). And if anyone is killed, then THAT is a capital offense…

Si

What I don’t get is why it has to fight your input. If you’re inputting (to use a computer term), you’re likely not distracted. Isn’t it supposed to be for distracted people, meaning people not inputting anything, not doing anything?

It’s like the spelling and grammar checks when one is writing on a computer. I am amazed how much it messes up what I am writing. Except that here, when it guesses wrong, it’s kind of a big deal.

Definitely not ready for prime time. I recently purchased an Acura MDX with lane assist. On the ride home from the dealership after purchase I lost control. It seems I was driving through a construction zone and the ‘computers’ saw barrels with stripes on them on either side of the road, thought the barrel stripes were road stripes and veered away from them. The car also brakes hard when, on a curve on a two-lane highway, another car passes you going in the opposite direction. I’m just waiting for the lawsuits. Acura is history over this!!!!

Hi Robert,

It’s not just Acura, either… this Lane Assist scheisse is now standard/optional in prolly half the cars at $30k or above.

Robert –

Are you able to disable this? That’s why I own a 2005 MDX. My car has zero active safety features except for maybe stability control, which I have never used.

Swamp,

Yes you can disable this feature. The problem is that you don’t know how, when you initially drive the car out of the showroom. Steep learning curve on these computers.

Regards

The Lost in Space reference is even more appropriate than you realized to your commentary about safety features backfiring.

In the pilot and first few episodes of the first season, Dr. Smith had programmed the robot to kill the crew as part of his attempt to sabotage the Jupiter II mission. The robot actually said, “Kill! Kill!”

These early episodes were so dark that CBS supposedly told the creator and producer of the series, Irwin Allen, that he was scaring the kids (the intended audience). So he either had to tone things down or face the program being moved to later in the evening—losing that intended audience. And that’s how Dr. Smith evolved from being a murderous menace to being the creepiest and most mincing pseudo-pedophile in any TV series, and how the robot evolved into being the crew’s watchful caretaker instead of its slaughterer.

The robot was supposed to have been in that caretaker role for the Robinson family in the first place. Instead, at least at first, it became something very different. Just like this lane-change “safety” feature…

When I was a kid and first saw “Lost in Space” I wondered why the first episodes were so good and the rest were so crappy. I figured they just cut the budget.

Ekramp, this brings up a good point. Who is doing the programming, and can it be overridden?

This sounds like a target-rich environment for cyber terrorists. Imagine dozens if not hundreds of fatalities and injuries from deliberately programmed pile-ups of self-driving cars across the nation. No need to blow yourself up in a car bomb when you can cause destruction with just the car itself, safely, from a remote location.

It sounds like someone needs to watch the episode of 60 Minutes where Lesley Stahl experiences brake failure in a remotely controlled car on a test track, which was simply Michael Hastings lite.